For the last few days, I’ve been playing around with the new Claude Sonnet 3.7 model. I am not a developer. I’m barely even an amateur developer. I used to be the Program Manager for a coding bootcamp, and I tried to learn to code. I got swept up in the whole “everyone should learn to code” movement, mostly because that was how we marketed the bootcamp.

But I never could get the hang of it. I could write basic HTML and CSS but the logic of JavaScript eluded me. My brain just couldn’t wrap itself around the rationale.

But I’ve always wanted to learn to code. And Nat Eliason’s Build Your Own Apps course has got me closer than I’ve ever been.

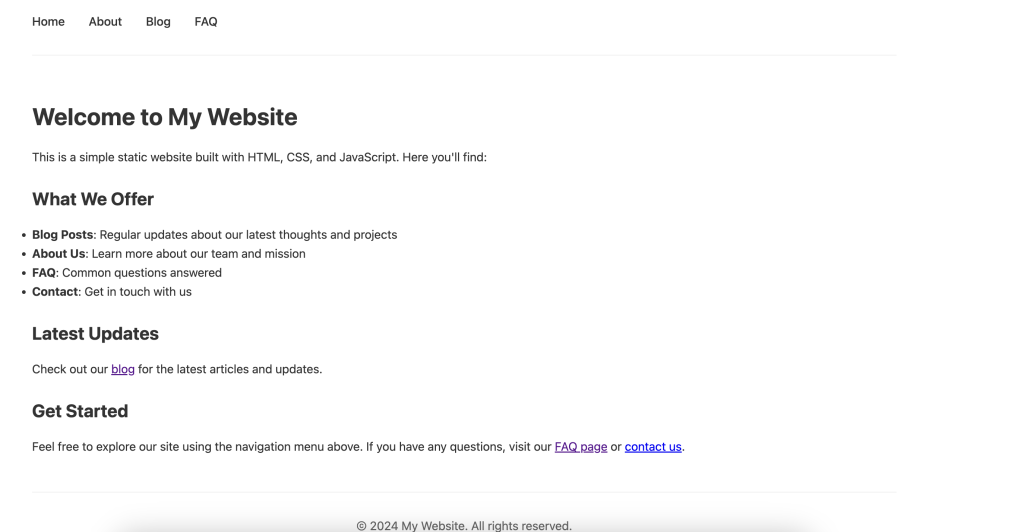

Over the weekend I built a simple Pomodoro app and a simple static website using Node.js. I’ve been using Cursor and running the newest version of Claude Sonnet (3.7).

What you are able to create using a few simple prompts is like magic. At General Assembly, we would spend the first few days teaching the concepts I was able to spin up in a few hours.

But there is still a learning curve here. And you do need to understand how code is structured to get the most out of these AI applications. The idea that you can just feed the AI a screenshot and have it build out a website is mostly good marketing.

Yes, you can get the app to build a prototype. But it won’t work properly unless you understand how the code works and how to change it.

AI coding tools like Cursor are excellent teachers. I was able to troubleshoot parts I couldn’t understand or get to work by copying the error message and prompting the AI to explain why it wasn’t working.

But I still had to make the changes myself. When I used the ‘Agent’ setting in Cursor to make them, it wildly overcomplicated things and created unnecessary files and folders.

Claude Sonnet 3.7 is like a wild horse. You need to keep it running in straight lines for it to be useful.

I’m collecting some of the useful prompts and insights I’ve come across here, so I can refer back to them.

The big unknown with all these AI tools is how we should use them. Or rather, how we could use them. As Matt Webb points out, the best design patterns haven’t been invented yet.

The problem is there are so many AI products. Everything overlaps and it’s all so noisy – which makes it hard to have a conversation about what kind of product you want to build.

A large language model on its own isn’t enough to enable products. We need additional capabilities beyond the core LLM.

In Webb’s view, there are three capabilities that really matter:

- Real-time. Faster means interactive. Computers crossed the same threshold once upon a time, going from batch processing to GUIs.

- RAG/Large context. Being able to put more information into the prompt, either using retrieval augmented generation or large context windows. This allows for steering the generation.

- Structured generation. When you can reliably output text as a specific format such as JSON, this enables interoperability and embedded AI, eventually leading to agents.
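To make the structured-generation point concrete, here is a minimal Python sketch. It assumes you’ve asked a model to reply with a JSON object containing a `title` and a `duration_minutes` field (a hypothetical Pomodoro task). The field names, the `parse_structured` helper and the sample reply are all made up for illustration, and no real model API is called. The point is that once the output is reliably JSON, ordinary code can check it and use it directly, or reject it and retry.

```python
import json

# Hypothetical schema: the fields we asked the model to return, with expected types.
EXPECTED_FIELDS = {"title": str, "duration_minutes": int}


def parse_structured(raw: str) -> dict:
    """Parse a model reply that was meant to be JSON and check its shape.

    Raises ValueError if the text is not valid JSON, is not an object,
    or is missing a field, so calling code can retry instead of crashing later.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as err:
        raise ValueError(f"not valid JSON: {err}") from err
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key, expected_type in EXPECTED_FIELDS.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"missing or mistyped field: {key}")
    return data


# A made-up model reply, standing in for a real API call.
reply = '{"title": "Write blog post", "duration_minutes": 25}'
task = parse_structured(reply)
print(task["duration_minutes"])  # prints 25
```

If the model wraps its answer in chatty text (“Sure! Here’s your JSON…”), `json.loads` fails and the helper raises `ValueError`. Making that failure rare is what reliable structured generation buys you.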

This leaves us with a landscape of tools that, broadly, falls into four main categories.

These tools use AI in different ways, and users interact with the technology in four distinct ways.

- Tools. Users control AI to generate something.

- Copilots. The AI works alongside the user in an app in multiple ways.

- Agents. The AI has some autonomy over how it approaches a task.

- Chat. The user talks to the AI as a peer in real-time.

You can start to see how this breaks down into distinct tools, as Matt illustrates.

Having broken these tools down into categories, he identifies a number of UX challenges specific to each.

Generative tools will prioritise reliability and connecting to existing workflows. Live tools are about having the right high-level “brushes,” being able to explore latent space, and finding the balance between steering and helpful hallucination.

Copilots need to be integrated into existing apps, with workflows that acknowledge the different phases of work. They also need to help the user make use of all the functionality, which might mean clear names for things in menus, or ways for the AI to be proactive.

Agents are about interacting with long-running processes: directing them, having visibility over them, correcting them, and trusting them.

Chat has an affordances problem. As Simon Willison says, tools like ChatGPT reward power users.

It’s like Excel: getting started with it is easy enough, but truly understanding its strengths and weaknesses and how to most effectively apply it takes years of accumulated experience.

For more, see why I love the London coding scene. A bunch of its meetups are exploring these problems in real time.
